Tool Shaped Objects

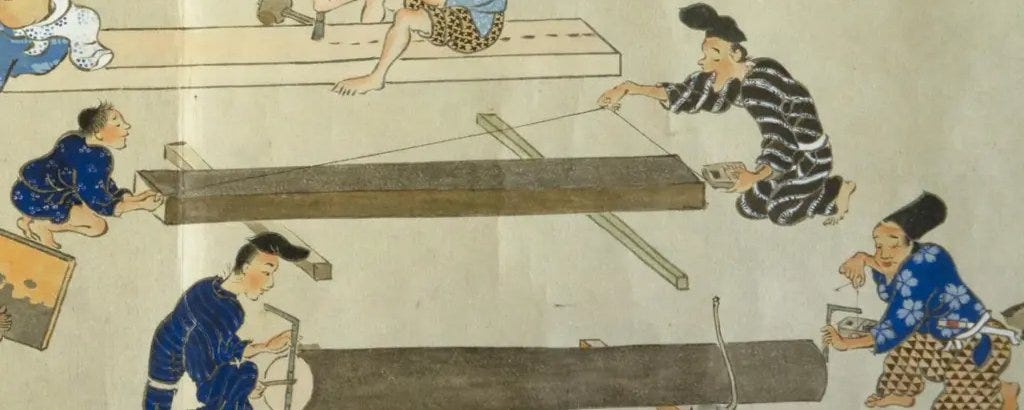

In 1711, a toolmaker in Kyoto named Chiyozuru Korehide began forging kanna blades for the carpenters building the temples at Higashi Hongan-ji. The blades were forged from laminated steel, the highest quality white hagane forge-welded to soft iron, and were extraordinary.

Three hundred years later, his descendants still forge them. A Chiyozuru kanna costs somewhere between three hundred and three thousand dollars. It takes days to set up. The dai must be hand-fitted, the blade back flattened on a series of progressively finer stones, the chipbreaker mated until light cannot pass between it and the edge. Only then can you take a shaving.

The shaving that curls away is transcendent. It is beautiful. It is also, in the economic sense, worthless. A power planer does the same work in a fraction of the time. The kanna exists so that the setup can exist.

I want to talk about a category of object that is shaped like a tool, but distinctly isn’t one. You can hold it. You can use it. It fits in the hand the way a tool should. It produces the feeling of work-- the friction, the labor, the sense of forward motion-- but it doesn’t produce work. The object is not broken; it is performing its function. Its function is to feel like a tool.

This week, a slop-essay called “Something Big is Happening” reached escape velocity. 40 million people and about four hundred billion dollars of AUM have read and discussed it in fevered tones.

It was written, or perhaps more precisely generated, by Matt Shumer, the CEO of an LLM startup that I couldn’t immediately parse the function of from its various landing pages.

What is interesting is not that the essay is slop. What is interesting is that people consumed it. They shared it. They engaged with it. They performed the act of reading and distributing an essay about artificial intelligence that was itself produced by artificial intelligence, and at no point in this loop did the output matter. The consumption was the product. The sharing was the output. The essay, much like the AI it discusses, was a tool-shaped object and it worked exactly as designed.

This is, ultimately, also the story of the AI boom so far. The dominant narrative about AI is not what it has built, but the rate at which people are consuming it. The rate at which we are spending on GPU farms. The rate at which we are expensing the tools against Ramp cards.

The headlines are token budgets and GPU clusters and billion-dollar training runs and trillion-dollar infrastructure buildouts. The story is the capex.

AI is everywhere in consumption and almost nowhere in output. We are spending unprecedented sums to acquire, configure, deploy, and operate these systems, and the primary product of that spending is the experience of spending it.

A woodworker who spends six figures a year on exotic hardwoods he will never build with is not investing in output. He is investing in scrap. The wood exists so that the tools have something to touch. The shavings and scraps are the product.

Miles Grimshaw, a much better investor than I am, recently forced the idea of “token budget” into our collective consciousness. The framing was that of a compensation negotiation: token budget as a proxy for resources, for seriousness, for how much work the company expects you to do with these tools.

We have begun to talk about token consumption the way we talk about capital expenditure: as an input that scales linearly to output. More tokens, more work. Bigger budget, bigger results. This framing is so natural, so intuitive, so aligned with every other resource allocation decision a manager makes, that almost no one has stopped to ask whether the relationship between tokens consumed and value produced is a line, a curve, or a cloud.

It is, in most cases, a cloud. But the budget is real.
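One way to ask the question, rather than assume the answer, is to plot tokens against some measure of value and check how well they track each other. What follows is a minimal sketch, not a method anyone cited here actually uses. The function names (`pearson_r`, `shape_of`), the thresholds, and every number in the sample data are hypothetical and chosen purely for illustration. It calls the relationship a "line" when value tracks tokens directly, a "curve" when value tracks only the logarithm of tokens (diminishing returns), and a "cloud" when it tracks neither.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no signal either way
    return cov / math.sqrt(var_x * var_y)


def shape_of(tokens, value, strong=0.9, weak=0.3):
    """Label the tokens-to-value relationship.

    The `strong` and `weak` thresholds are arbitrary assumptions.
    """
    linear = abs(pearson_r(tokens, value))
    logarithmic = abs(pearson_r([math.log(t) for t in tokens], value))
    if linear >= strong:
        return "line"
    if logarithmic >= strong:
        return "curve"
    if max(linear, logarithmic) < weak:
        return "cloud"
    return "unclear"


# Made-up token counts per team, spanning several orders of magnitude.
tokens = [1, 10, 100, 1_000, 10_000]

# Made-up "value" series, one for each story we might tell ourselves.
proportional = [3 * t for t in tokens]            # more tokens, more work
diminishing = [math.log10(t) for t in tokens]     # each 10x buys one more unit
unrelated = [2, 0, 3, 0, 2]                       # value ignores the spend

print(shape_of(tokens, proportional))
print(shape_of(tokens, diminishing))
print(shape_of(tokens, unrelated))
```

The hard part is not the arithmetic. It is agreeing on what goes in the `value` column before the budget is approved.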

The problem begins when the tool-shaped object is designed to hide this from you. When the feeling of work becomes the product, sold as work itself.

Consider FarmVille.

FarmVille is a command-and-control interface. No matter where you click, your farm will expand, your crops will grow, and the number will go up. The only input is your time, the direction of which is largely irrelevant. The screen fills with evidence of your effort: crops, cosmetics, and increasingly large barns.

The number goes up. This is the entire product.

The market for feeling productive is orders of magnitude larger than the market for being productive. Most people, most of the time, want to click and watch the number go up. They do not want to be told the number is fake. They will pay— in time, in attention, in actual money— to keep the number going up.

FarmVille is a tool-shaped object.

Tool-shaped objects are not new. Entire product categories exist in this space. The productivity app that you configure for three weeks and then never use. The Notion workspace with fourteen linked databases tracking a life that does not require tracking. People got their bodies tattooed with Roam Research symbols in 2018; people forget this now.

These are all kanna. These are tool-shaped objects. The setup is the practice. But unlike the Japanese woodworker, the user of these objects typically believes he is doing the thing the tool is shaped like, and not the thing the tool actually does.

The current generation of LLM-driven insanity (the billion-dollar frameworks, the orchestration layers, the agentic workflows) is the most sophisticated tool-shaped object ever created.

You can build an agent that reads your email, summarizes the contents, drafts a response, checks the response against a style guide, routes the response through an approval chain, logs the interaction, and reports the results to a dashboard. You can watch this happen. You can watch the tokens stream. You can see the chain of thought. You can monitor the system prompt. You can adjust the temperature. You can swap the model. You can add a tool. You can add six tools. You can add a tool that calls another agent that calls a third agent that searches the web and synthesizes the results into a memo that no one will read.

The number goes up.

I have seen teams of very smart engineers build agent systems of breathtaking complexity whose primary output is the existence of the system itself. The agents run. They produce logs. The logs are analyzed by other agents. Reports are generated. Dashboards are populated. The entire apparatus hums with the unmistakable energy of work being done.

What is being done is the operation of the apparatus.

This is not to say that LLMs as such are worthless; quite the opposite. These models, at least from my view, will become very good in short order, and the careful deployment of them will have unbelievable effects on productivity in the real economy.

But my narrow suggestion is that this diffusion into the real economy will take much longer, and look much different than the current run on South Bay Best Buys for Mac Minis would have you believe.

This is FarmVille at institutional scale. The quality that makes LLMs so extraordinarily effective as tool-shaped objects is their verbal fluency. Every prior tool-shaped object had to work within the constraints of its medium. FarmVille could only produce the sensation of farming. Notion could only produce the sensation of organizing. But an LLM can produce the sensation of anything.

What makes this particularly difficult to see is that LLMs are also, genuinely, tools. They do real work. The line between the tool and the tool-shaped object is not a line at all but a gradient, and the gradient shifts with every use case, every user, every prompt. You can only fail to notice when you have crossed from one side to the other.

Ask what the number is before making it go up.

Somewhere Charles Goodhart is sitting at a pub nursing a pint and smiling quietly.
